Securing your remote work environment

It’s probably safe to say that if you are able to work remotely right now, you are doing just that. Aside from concerns like uncomfortable chairs, not enough monitors, and desks that seem to be at the wrong height no matter what you do, it’s likely that your home network wasn’t set up with long-term remote work in mind. Home networks don’t have the same security controls that most corporate networks have, but there are still things you can do to make your remote work environment as secure as possible.

Change default passwords

Many attacks against home networking devices or Internet-of-Things (IoT) devices rely on the fact that many people don’t change default passwords. Changing all device passwords after setup to a complex, unshared password is the first step in protecting your network from attacks. Since most of these passwords only need to be used occasionally, this is a great use case for a password manager that generates long passphrases that are almost impossible to guess.
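To illustrate what a password manager does when it generates a passphrase, here is a minimal sketch in Python. The word list and the `make_passphrase` function are hypothetical and exist only for this example; a real generator draws from a list of several thousand words, which is where the strength of the passphrase comes from.

```python
import secrets

# Hypothetical, deliberately tiny word list for illustration only.
# A real passphrase generator uses a list of several thousand words.
WORDS = [
    "maple", "orbit", "candle", "river", "plaza", "tiger",
    "velvet", "anchor", "pebble", "walnut", "comet", "harbor",
]


def make_passphrase(num_words=6, separator="-"):
    """Join randomly chosen words into a passphrase.

    secrets.choice uses the operating system's cryptographically secure
    random source, unlike the general-purpose random module.
    """
    if num_words < 1:
        raise ValueError("num_words must be at least 1")
    return separator.join(secrets.choice(WORDS) for _ in range(num_words))
```

Each call to `make_passphrase()` returns a different passphrase, so in practice you would generate one per device and store it in your password manager rather than trying to remember it.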

Check your router configuration

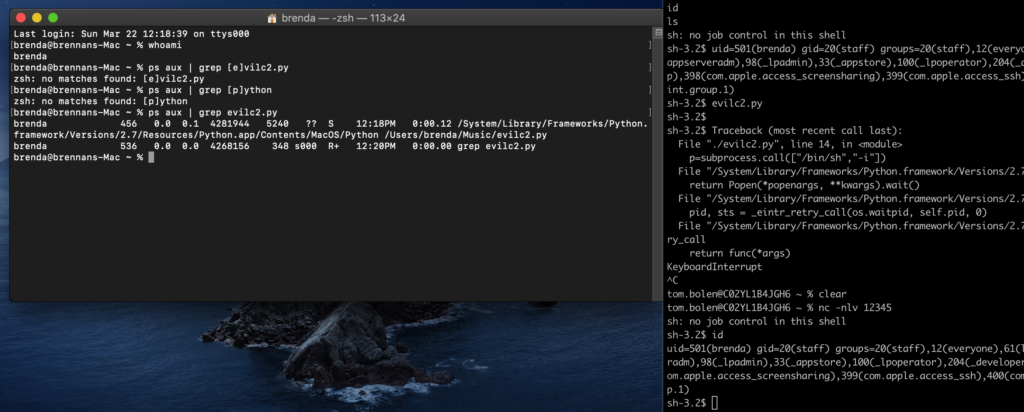

By default, most home routers are configured to only let traffic out to the broader internet, not allow traffic into your home network from the outside. That default configuration is best from a security standpoint, but some services may need to be able to reach into your network. This is commonly achieved by enabling port forwarding, and although it’s not inherently insecure, misconfigurations can introduce security gaps. It’s a good idea to check your router configuration and remove any unnecessary configuration options like unused port forwards, remote administration, and Universal Plug and Play (UPnP).

A useful way to check whether your router is accessible from the internet is to run a scanning tool like Shields Up! (https://www.grc.com/x/ne.dll?bh0bkyd2), which scans your router for open ports that could represent a security risk.
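If you are curious what a port check involves, the sketch below tries to open a TCP connection to each port in a list and reports the ones that accept it. The function names are made up for this example. Note that a script run from inside your home network only sees the router's internal side, so it complements an outside scan rather than replacing it, and it should only be pointed at devices you own.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def open_ports(host, ports, timeout=1.0):
    """Return the subset of ports on host that accept TCP connections."""
    return [port for port in ports if is_port_open(host, port, timeout)]
```

For example, assuming your router's internal address is 192.168.1.1 (a common default, but check your own), `open_ports("192.168.1.1", [22, 23, 80, 443])` would list which of those management-related ports respond.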

Patch all devices

While it is pretty easy to keep on top of installing OS updates on your endpoint because most systems automatically apply patches these days, the same is not true for network devices like routers, access points, and so on. It’s even less true for many IoT devices, since those devices can be incredibly hard to update even if updates are available. It’s still important to keep every device on your network as up to date as possible, to prevent exploitation of known security vulnerabilities.

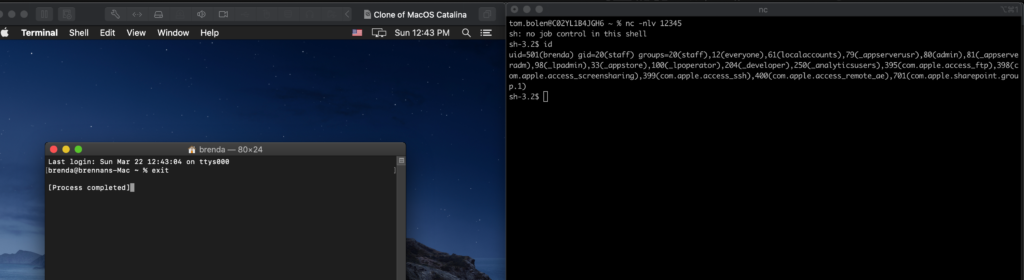

Create a separate network for IoT devices

IoT devices are a prime target for hackers because they are so hard to patch and many people don’t even consider that they can represent a security risk. Because they are such a big target, consider creating a separate network entirely for these kinds of devices to minimize the damage that could be done if they do get compromised. Some home routers allow you to easily configure multiple networks so you can keep trusted and untrusted devices separate, but in many cases completely segregating networks requires purchasing multiple wireless access points or a more expensive router. Nevertheless, if you have the interest and technical skills to do this, it can be an effective countermeasure.
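Once you have two networks, it is easy to lose track of which device ended up where. The sketch below uses Python's standard `ipaddress` module to flag devices whose address does not match the network they are supposed to be on. The two address ranges, the device names, and the function names are all assumptions made for this example; substitute whatever ranges your router actually hands out.

```python
import ipaddress

# Hypothetical addressing plan: adjust to match your own router.
TRUSTED_NET = ipaddress.ip_network("192.168.1.0/24")
IOT_NET = ipaddress.ip_network("192.168.20.0/24")


def classify(address):
    """Return which network an IP address belongs to."""
    ip = ipaddress.ip_address(address)
    if ip in TRUSTED_NET:
        return "trusted"
    if ip in IOT_NET:
        return "iot"
    return "unknown"


def misplaced_devices(inventory):
    """Return names of devices that are not on their intended network.

    inventory maps a device name to a tuple of
    (current IP address, intended network name).
    """
    return [
        name
        for name, (address, intended) in inventory.items()
        if classify(address) != intended
    ]
```

With an inventory such as `{"work-laptop": ("192.168.1.10", "trusted"), "smart-plug": ("192.168.1.77", "iot")}`, the function would report the smart plug, because it is sitting on the trusted network instead of the IoT one.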

Treat your business devices appropriately

Just because you are on your work laptop at home doesn’t mean it has turned into your personal laptop! Always be mindful of what you are doing on your device, and don’t give in to the temptation to lower your guard because you are in a comfortable, less business-like setting. It’s even more important not to open suspicious emails or documents when working remotely, because some of the security controls that protect you on your corporate network aren’t in place at home. Also keep in mind that this applies to personal accounts you access on your corporate device. Better to be safe than sorry!

Use ethernet whenever possible

This is not so much a security tip as a performance tip. With so many of us (and our neighbors) working from home, the 2.4 GHz and 5 GHz spectrum in which wifi operates is more congested than ever. Ethernet doesn’t have this problem, so for the most reliable and secure network performance, use wired ethernet.

Disable insecure wifi modes

The problem with wireless is that your wireless network, and the band that it’s on, is its own collision domain: every device shares the same airwaves. Once someone gets on your network, they can listen to the traffic that all other devices are sending on it. To make that as difficult as possible, disable convenience features such as WPS, and in general use the strongest encryption mode that your router supports, such as WPA2-PSK (AES) in preference to mixed WPA/WPA2-PSK.

With these tips in mind, you can make sure that “working remotely” doesn’t turn into “exploited remotely”.