Who’s watching your VirusTotal submissions?

Phishing testing is often part of a company’s security training, and frequent phishing tests are part of our security program here at Code42. In those tests we track both click rates and reporting rates, since we consistently ask our employees to report any suspicious-looking email to the security team. So when the most recent phishing test report from our vendor, KnowBe4, included three clicks from IP addresses in China, we opened an investigation that ultimately uncovered some interesting consequences of using public security scanners.

All three users said that they hadn’t clicked on the link in the phishing test, and all three had reported the email as expected. A user clicking a link after reporting the email as suspicious is possible, but it isn’t a behavior pattern we typically see. Plus, thanks to the security culture at Code42, most users willingly own up to clicking on links in emails, so we had no reason to doubt them when they said they hadn’t clicked on anything. That did present a puzzle, however: how could a personalized KnowBe4 link that existed only in the phishing test email make its way to an IP address in China, and did that indicate something malicious? With no immediate answers, the SOC team started digging in.

When brainstorming how this URL could have ended up outside the user’s account, we came up with several possible threat vectors:

- The obvious one is malware: in this case, malware on the endpoint that scrapes URLs from emails or otherwise provides remote access that could be used to extract data from an email account. That’s not impossible, but the idea of somebody using such access to grab a URL from an email and not, say, deploy ransomware seemed unlikely.

- A fake OAuth application: an app that looks legitimate and connects to your online accounts via OAuth, requesting permissions such as reading your email in your Office 365 or Google accounts. These apps are becoming more and more prevalent, so this was a serious option to consider.

- Another possibility was a malicious browser extension that could grab data from a Gmail page. This is also an issue that security teams increasingly need to keep in mind as part of their threat model.

- Finally, we considered that our own security tools or processes may have triggered some unintended side effects that led to this behavior.

It turned out that the last one was the culprit! But before moving on to the explanation, a few words about investigating the other options. An EDR tool would be the most useful way to investigate the malware scenario. For suspicious OAuth apps, you can review connected apps in your cloud provider’s admin console, or monitor your log event stream and capture new OAuth permission grants as they happen. As for browser extensions, a tool like osquery can enumerate installed extensions and help identify any that look odd.
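As a rough illustration of the osquery approach, the Python sketch below post-processes osquery's JSON output and flags Chrome extensions whose permissions mention broad host access or Gmail specifically. It assumes the `chrome_extensions` table exposes a `permissions` string column and that you run a query like the one in the docstring; the `RISKY_PERMISSIONS` list and the function name are hypothetical choices for this example, not a vetted detection rule.

```python
import json

# Host patterns that would let an extension read Gmail page content. This short
# list is an illustrative assumption, not a complete or authoritative set.
RISKY_PERMISSIONS = ("<all_urls>", "*://*/*", "https://mail.google.com/*")


def flag_risky_extensions(osquery_json: str) -> list:
    """Return extensions whose permissions mention one of RISKY_PERMISSIONS.

    Expects the JSON array printed by a query along the lines of:
      osqueryi --json "SELECT u.username, c.name, c.identifier, c.permissions
                       FROM users u CROSS JOIN chrome_extensions c USING (uid);"
    """
    flagged = []
    for row in json.loads(osquery_json):
        perms = row.get("permissions") or ""  # treat missing/null as no permissions
        hits = [p for p in RISKY_PERMISSIONS if p in perms]
        if hits:
            # Keep the original row and record which patterns matched.
            flagged.append({**row, "risky": hits})
    return flagged
```

A match is only a lead for manual review: plenty of legitimate extensions request broad host access.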

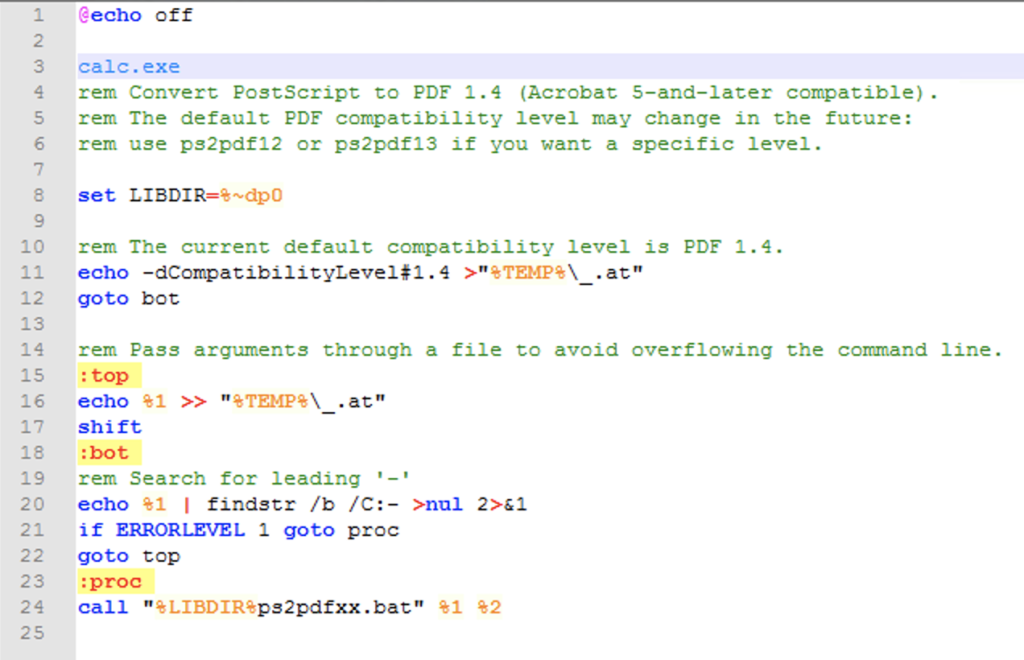

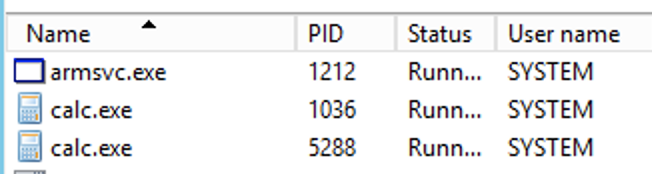

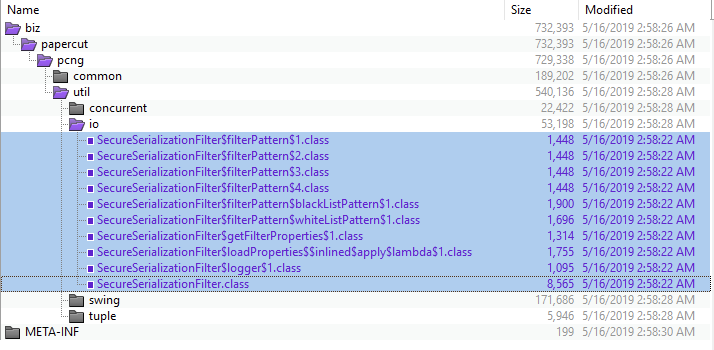

But in this case, it was our own security tools that led to the odd clicks. At Code42, we use Palo Alto Cortex XSOAR as our SOAR platform, and one of our key automation playbooks handles emails that users send to our SOC team. That playbook includes an automated response for people who submit phishing test emails: it thanks them for the submission and records who reported the email for the metrics mentioned above. However, sometimes the email is forwarded in a way that prevents the playbook logic from automatically determining that it is part of a phishing exercise. When that happens, the email goes through the normal investigation workflow, which includes sending its URLs to services like urlscan.io and VirusTotal. Ultimately, we determined that VirusTotal was the service that led to the recorded click events, but how did we get there?
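XSOAR playbooks are built inside the platform, so the Python snippet below only illustrates the branching described above; it is not how our playbook is implemented. It assumes the phishing vendor stamps a marker header on test emails (the name `X-PHISHTEST` is an assumption here, so check your vendor's documentation) and shows why the forwarding method matters: forwarding as an attachment preserves the original headers in a `message/rfc822` part, while an inline forward flattens them into body text and the check fails.

```python
from email import policy
from email.parser import BytesParser

# Assumed marker header stamped on phishing-test emails; confirm the real name with your vendor.
PHISH_TEST_HEADER = "X-PHISHTEST"


def is_phishing_exercise(raw: bytes) -> bool:
    """Return True if the reported email, or an email attached to it, carries the marker header."""
    msg = BytesParser(policy=policy.default).parsebytes(raw)
    if PHISH_TEST_HEADER in msg:  # header lookup is case-insensitive
        return True
    # Forward-as-attachment keeps the original message, headers included, in a message/rfc822 part.
    for part in msg.walk():
        if part.get_content_type() == "message/rfc822":
            inner = part.get_payload(0)
            if PHISH_TEST_HEADER in inner:
                return True
    # Inline forwards flatten the original headers into body text, so they fall through here
    # and the email would continue into the normal investigation workflow.
    return False
```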

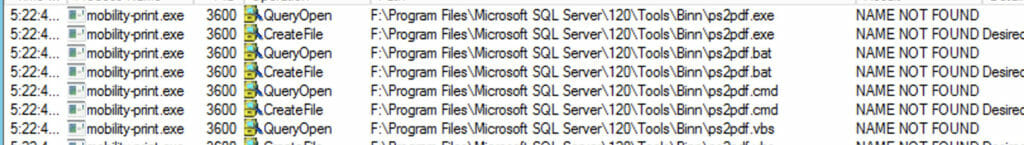

First, we started with a standard tactic: checking whether those suspicious IPs showed up anywhere besides the URL click. When we searched our logs for other traffic from those IPs, we did find a few events. They were requests to webservers that we control (and therefore log), and they were largely innocuous: HTTP requests to static pages, support articles, and so forth. Digging into one particularly personalized URL, though, we found that not only had the suspicious IP visited it, but so had a number of other IPs, including IPs belonging to Google, DigitalOcean, and Palo Alto Networks. A close look at the user agents for those events showed that the sequence always appeared to start with a Google IP whose user agent string included “appid: s~virustotalcloud”. Once we saw this, things began to fall into place.
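The pivot described above can be sketched in a few lines of Python. This is a minimal sketch assuming web server logs have already been parsed into records with a timestamp, source IP, URL, and user agent; the `LogEvent` type and both function names are hypothetical. The only detail taken from our investigation is the `appid: s~virustotalcloud` substring in the user agent.

```python
from dataclasses import dataclass
from datetime import datetime

# Substring we saw in the user agent of VirusTotal's fetcher.
VT_MARKER = "appid: s~virustotalcloud"


@dataclass
class LogEvent:  # hypothetical shape for a parsed web-server log line
    ts: datetime
    ip: str
    url: str
    user_agent: str


def pivot_on_ips(events, suspicious_ips):
    """For each URL a suspicious IP requested, return every request to that URL, oldest first."""
    urls = {e.url for e in events if e.ip in suspicious_ips}
    return {
        url: sorted((e for e in events if e.url == url), key=lambda e: e.ts)
        for url in urls
    }


def starts_with_virustotal(timeline) -> bool:
    """True when the earliest request in a URL's timeline came from VirusTotal."""
    return bool(timeline) and VT_MARKER in timeline[0].user_agent
```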

We discovered a consistent pattern: the VirusTotal HTTP request came first, and then over a period of 24–36 hours, other IPs made HTTP requests to the same URL. Some of these URLs were very long and had arbitrary data appended, so the only plausible source was the original VirusTotal request. In other words, it looked like organizations were ingesting all URLs submitted to VirusTotal via its API and then visiting those URLs themselves, likely to do their own analysis.
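To check the "VirusTotal first, then everyone else" pattern across many URLs, a sketch like the following measures how long after VirusTotal's first visit each other requester showed up. It assumes web server logs parsed into records with a timestamp, source IP, URL, and user agent (the `LogEvent` type and function name are hypothetical), and it uses a 36-hour window as an illustrative cutoff based on the spread we saw. It deliberately reports nothing unless VirusTotal was the very first requester.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

VT_MARKER = "appid: s~virustotalcloud"  # substring seen in VirusTotal's user agent
FOLLOW_WINDOW = timedelta(hours=36)  # upper end of the 24-36 hour spread we observed


@dataclass
class LogEvent:  # hypothetical shape for a parsed web-server log line
    ts: datetime
    ip: str
    url: str
    user_agent: str


def followers_after_virustotal(events, url, window=FOLLOW_WINDOW):
    """Return (ip, delay) for non-VirusTotal requests to `url` within `window` of VirusTotal's visit."""
    hits = sorted((e for e in events if e.url == url), key=lambda e: e.ts)
    # Only trust the pattern when VirusTotal was the very first requester.
    if not hits or VT_MARKER not in hits[0].user_agent:
        return []
    vt_ts = hits[0].ts
    return [
        (e.ip, e.ts - vt_ts)
        for e in hits[1:]
        if VT_MARKER not in e.user_agent and e.ts - vt_ts <= window
    ]
```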

For some of the source IPs, this explanation made a lot of sense: VirusTotal has a robust API, including a feed of all submitted URLs, and other security vendors use this data as an input to their own tooling to add context. But some of the traffic suggested that unknown actors with no obvious connection to security were doing the same thing.

At least, that was our hypothesis. The next step was to test it, so we generated a fake, easily trackable URL on a domain we controlled, submitted it to VirusTotal, and sat back to wait for the results. Sure enough, we saw the same pattern once again:
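A test URL like this can be made trackable by embedding an unguessable token, so any request for that exact path almost certainly traces back to the submission. The sketch below assumes a hypothetical domain, `canary.example.com`, that you control and log. It also builds, but does not send, a submission request for VirusTotal's v3 `POST /api/v3/urls` endpoint, which takes the URL as a form field and the API key in an `x-apikey` header; the function names are hypothetical.

```python
import secrets
import urllib.parse
import urllib.request

CANARY_DOMAIN = "canary.example.com"  # hypothetical domain you control and log


def make_canary_url(domain: str = CANARY_DOMAIN) -> str:
    """Return a URL with a random 128-bit token so hits on it are attributable."""
    return f"https://{domain}/t/{secrets.token_hex(16)}"


def build_vt_submission(url: str, api_key: str) -> urllib.request.Request:
    """Build (without sending) a VirusTotal v3 URL-submission request."""
    body = urllib.parse.urlencode({"url": url}).encode()  # form-encoded "url" field
    return urllib.request.Request(
        "https://www.virustotal.com/api/v3/urls",
        data=body,
        headers={"x-apikey": api_key},
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen(req)` would perform the actual submission; after that, the canary domain's access logs show who fetches the token path and when.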

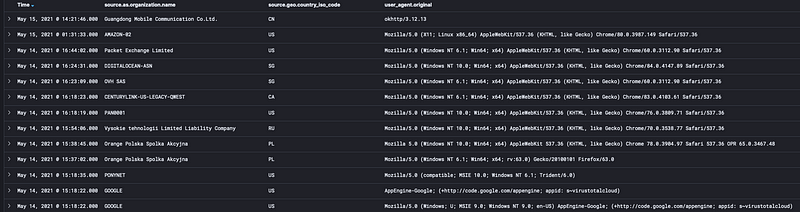

The first HTTP requests came from VirusTotal. As before, Palo Alto Networks, DigitalOcean, and AWS showed up. But so did more curious networks like “Orange Polska Spolka Akcyjna” and “Vysokie tehnologii Limited Liability Company”. Finally, at the end and as if on schedule, the network we saw in our phishing exercise appeared: “Guangdong Mobile Communication Co.Ltd.” Its traffic consistently had the user agent string “okhttp/3.12.13”, which also matched the “Generic Browser” value that the phishing reporting dashboard recorded for the browser that registered the phishing link click.
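Once the automated sources are known, a simple triage step can separate likely scanner traffic from likely human clicks before computing click rates. This sketch assumes you can obtain the raw user agent for each click (the dashboard we used summarized it as “Generic Browser”), and its marker list contains only the two strings from this investigation; the `Click` type and function name are hypothetical. User agents are trivially spoofed and okhttp is a common HTTP client library, so treat a match as a reason to review a click, not as proof.

```python
from dataclasses import dataclass

# User-agent fragments that pointed to automated fetches in this investigation.
# Illustrative only: extend or replace with your own observations.
AUTOMATED_UA_MARKERS = ("appid: s~virustotalcloud", "okhttp/")


@dataclass
class Click:  # hypothetical shape for one click record from a phishing report
    user: str
    ip: str
    user_agent: str


def split_clicks(clicks):
    """Partition click records into (likely_human, likely_automated) by user agent."""
    human, automated = [], []
    for c in clicks:
        ua = c.user_agent.lower()
        if any(m.lower() in ua for m in AUTOMATED_UA_MARKERS):
            automated.append(c)
        else:
            human.append(c)
    return human, automated
```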

In the end, we felt our hypothesis was confirmed: the clicks were neither user-initiated nor malicious. We also followed up with KnowBe4 and learned that we can remove those non-user-initiated clicks to ensure that our reporting is 100% accurate. But the episode served as a great reminder that when you use a tool like VirusTotal as part of an investigation, you don’t control who sees what you submit, and they may decide to take their own look at what you share. More importantly, when you see strange activity in a phishing exercise, remember to “assume breach”, but realize there are other explanations out there too!